Inference engines are algorithms that take an existing RDF model and infer (derive) new information from it. For example, an RDF model could define a rule that derives the grandparents of a person from the parents of that person's parents. TopBraid products include a SHACL inference engine for running SHACL rules as well as a SPIN inference engine called TopSPIN. Other inference engines may be domain-specific and can be defined by third parties through TopBraid's plug-in mechanism.
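To make the grandparent example concrete, here is a minimal sketch in plain Python. It is not how TopBraid, SHACL or SPIN express rules; triples are modeled as simple tuples, and the names `ex:parent`, `ex:grandParent` and `infer_grandparents` are hypothetical and chosen only for illustration.

```python
# Illustrative sketch only: triples are (subject, predicate, object) tuples.
# The property names ex:parent and ex:grandParent are hypothetical.

def infer_grandparents(triples):
    """Derive ex:grandParent triples from pairs of ex:parent triples."""
    # Index the parents of each person.
    parents = {}
    for s, p, o in triples:
        if p == "ex:parent":
            parents.setdefault(s, set()).add(o)

    # A grandparent is a parent of one of the person's parents.
    inferred = set()
    for person, direct_parents in parents.items():
        for parent in direct_parents:
            for grandparent in parents.get(parent, ()):
                inferred.add((person, "ex:grandParent", grandparent))

    # Report only triples that were not already asserted.
    return inferred - set(triples)


asserted = {
    ("ex:Alice", "ex:parent", "ex:Bob"),
    ("ex:Bob", "ex:parent", "ex:Carol"),
}
print(infer_grandparents(asserted))
```

The function only reads the input triples and returns the derived ones, which mirrors the idea that inferencing adds information without changing what was asserted.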

For either SHACL or SPIN inferencing to work, the graph you are running inferences on must include some SHACL or SPIN rules.

This is typically accomplished by importing (through owl:imports) a graph containing rules.

In the TBC workspace, under TopBraid/SPIN, you will find a file called owlrl-all.ttl.

This file contains a SPIN implementation of the OWL-RL profile.

Include it in your graph if you want to produce inferences specified in the OWL-RL profile.

There is a convenience feature on the Profile tab of your ontology (press the 'home' button) to activate OWL-RL.

From an RDF point of view, an inference engine takes input triples and adds inferred triples. In TopBraid Composer, the inferred triples are stored in a dedicated Inference Graph. Each graph you will work with in TBC has its own Inference Graph that will contain triples generated by running inferencing.
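The separation between asserted and inferred triples can be sketched as follows. This is a simplified illustration in plain Python under stated assumptions, not TopBraid's implementation: the class `GraphWithInferences`, its methods and the toy engine `subclass_engine` are all hypothetical names.

```python
# Illustrative sketch only: a graph that keeps inferred triples apart from
# asserted ones. All class and function names here are hypothetical.

class GraphWithInferences:
    def __init__(self, asserted):
        self.asserted = set(asserted)   # triples stated by the user
        self.inferred = set()           # the dedicated "inference graph"

    def run_inferencing(self, engine):
        """Run an engine and store its new triples in the inference graph."""
        derived = engine(self.asserted | self.inferred)
        self.inferred |= derived - self.asserted

    def clear_inferred(self):
        """Discard all inferred triples; asserted triples are untouched."""
        self.inferred.clear()

    def all_triples(self):
        return self.asserted | self.inferred


def subclass_engine(triples):
    """Toy engine: an instance of a class is also an instance of its superclass."""
    superclasses = {(s, o) for s, p, o in triples if p == "rdfs:subClassOf"}
    return {
        (inst, "rdf:type", sup)
        for inst, p, cls in triples if p == "rdf:type"
        for sub, sup in superclasses if sub == cls
    }


graph = GraphWithInferences({
    ("ex:Rex", "rdf:type", "ex:Dog"),
    ("ex:Dog", "rdfs:subClassOf", "ex:Animal"),
})
graph.run_inferencing(subclass_engine)
print(graph.inferred)
```

Because the inferred triples live in their own set, they can be inspected or discarded without touching the asserted data.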

The Inference menu provides the following main features, some of which are also accessible via buttons on the toolbar:

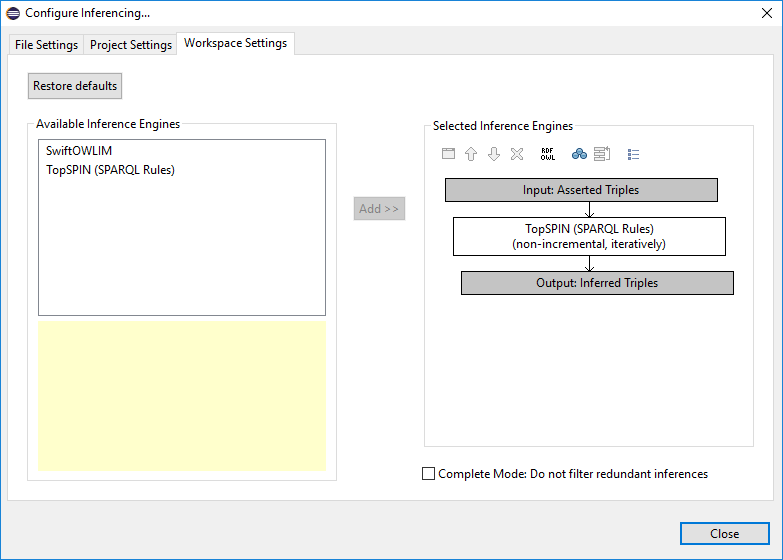

Inferencing can be configured per file (graph), per project, or for the whole workspace (i.e., global settings). By default, the global setting is to use SHACL and TopSPIN, but this setting can be overridden for individual files or projects. The following screenshot (brought up by clicking Inferences -> Configure Inferencing...) shows these default settings:

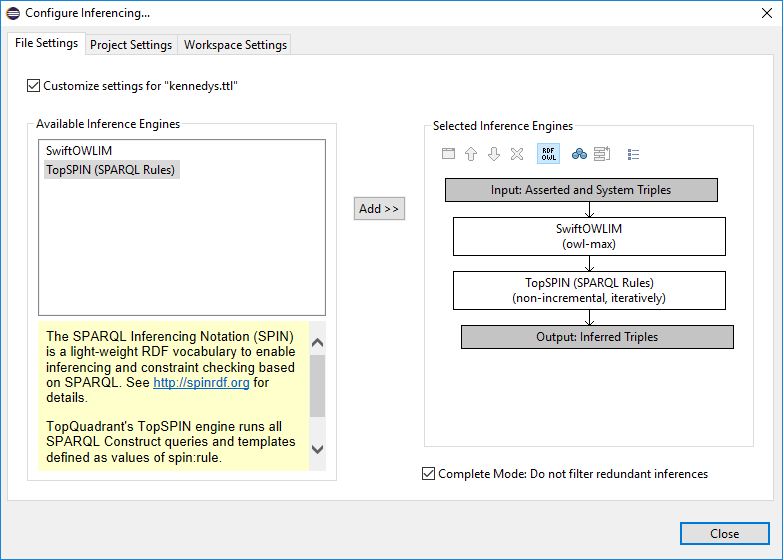

In the configuration dialog you see the list of available engines on the left, and a graphical display of the selected engines on the right. Depending on your TopBraid installation and version, you may see additional (or fewer) inference engines. Click on an engine on the left to see its description. To put it into the selection on the right, either double-click on it or use the Add >> button.

The graph on the right-hand side illustrates the kind of inferencing that is executed, and the order in which engines are chained together. Chaining means that the output of one step is taken as input to the next step. In the following example (with a screenshot from an older TopBraid version), the inference engine takes the asserted triples as input, then executes Swift-OWLIM, and then runs the selected Jena rules over the triples that were inferred by Swift-OWLIM. In this case, many additional rules fire, leading to more results.
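The chaining idea can be sketched in plain Python. This is only an illustration, not the actual TopBraid engine pipeline: `run_chain`, `subclass_engine` and `feeding_rule_engine` are hypothetical names, and the sketch assumes that each step sees the asserted triples plus everything inferred by earlier steps.

```python
# Illustrative sketch only: engines are functions from a set of triples to a
# set of derived triples. All names below are hypothetical.

def run_chain(asserted, engines):
    """Run engines in order; each step sees the output of the previous ones."""
    available = set(asserted)
    inferred = set()
    for engine in engines:
        new_triples = engine(available) - available
        inferred |= new_triples
        available |= new_triples   # becomes input to the next engine
    return inferred


def subclass_engine(triples):
    """Toy engine: instances of a class are also instances of its superclass."""
    superclasses = {(s, o) for s, p, o in triples if p == "rdfs:subClassOf"}
    return {
        (inst, "rdf:type", sup)
        for inst, p, cls in triples if p == "rdf:type"
        for sub, sup in superclasses if sub == cls
    }


def feeding_rule_engine(triples):
    """Toy rule: every ex:Animal eats ex:Food."""
    return {
        (inst, "ex:eats", "ex:Food")
        for inst, p, cls in triples
        if p == "rdf:type" and cls == "ex:Animal"
    }


asserted = {
    ("ex:Rex", "rdf:type", "ex:Dog"),
    ("ex:Dog", "rdfs:subClassOf", "ex:Animal"),
}

# Order matters: the rule can only fire after Rex is known to be an Animal.
print(len(run_chain(asserted, [subclass_engine, feeding_rule_engine])))
print(len(run_chain(asserted, [feeding_rule_engine, subclass_engine])))
```

With the subclass engine first, the rule engine receives the inferred `rdf:type` triple and fires. With the order reversed, the rule sees nothing to act on, so fewer triples are inferred.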

There are various additional options to fine-tune the inferencing process:

- rdf:type statements if the instance also has a subclass of the type as one of its rdf:types.
- rdfs:subClassOf statements of inconsistent classes (except with owl:Nothing).
- rdfs:subClassOf statements of anonymous classes.
- rdfs:subClassOf statements with anonymous classes (without restrictions).
- rdfs:subClassOf statements with immediate superclasses which are also transitively related via rdfs:subClassOf.
- rdfs:subClassOf statements with owl:Thing if another superclass exists.
- owl:disjointWith statements with inconsistent classes.
- owl:disjointWith statements between subclasses and superclasses.
- owl:equivalentProperty statements between subproperties.

Trivial Inferences

While you are editing, Composer maintains some "trivial" inferences which are updated in real-time. Common to those inferences is that they are relatively easy to perform (by a tool) and help users understand the effects of an assertion. The only currently supported trivial inference is: